Amir Zur

ʔamir t͡suʁ

Hi there! I am a first-year PhD student at Stanford NLP, excited about understanding how AI models learn and process language.

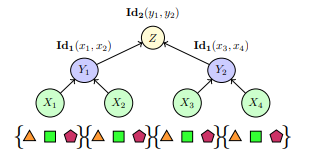

My research, advised by Dr. Christopher Potts, focuses on causally-motivated explanation and evaluation of language models.

Currently, I’m exploring how language models represent and resolve uncertainty. Can we track the decision-making process of a language model by inspecting the text it generates?

I’m always happy to chat!

news

| Sep 12, 2025 | Starting my PhD at Stanford!🌲 |

|---|---|

| Sep 20, 2024 | Our paper was accepted into EMNLP 2024 main conference! Excited to chat in Miami 😀 |

| Aug 26, 2024 | Started full-time as a Data Scientist at Microsoft! |

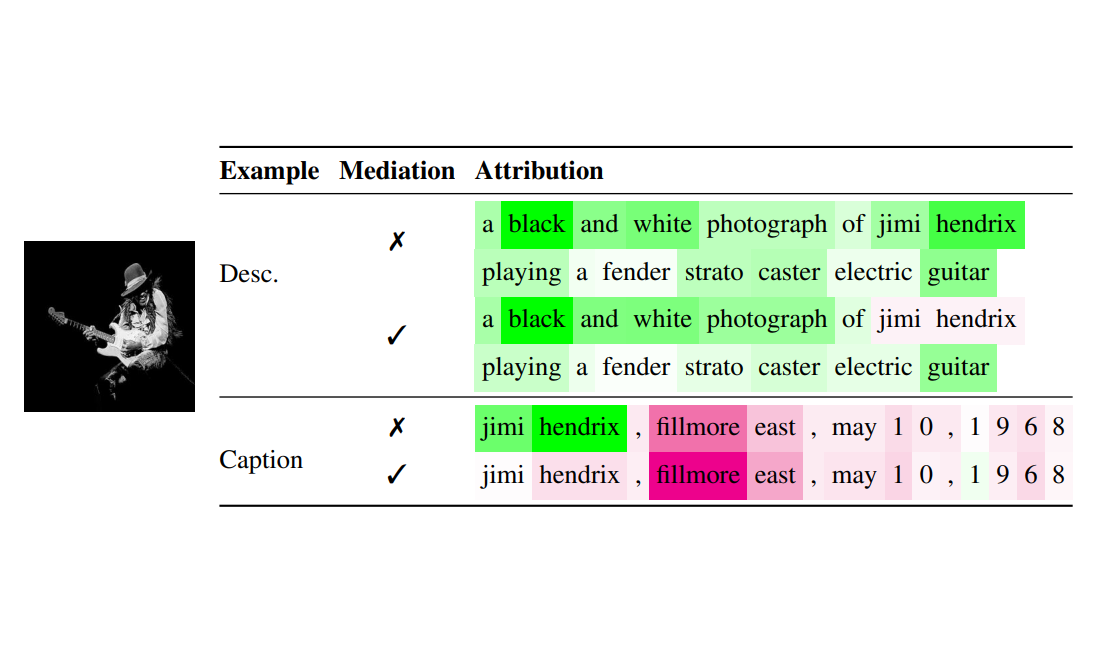

| Jun 12, 2024 | Posted our paper Updating CLIP to Prefer Descriptions Over Captions on arxiv. |